Week 5 (March 2/4): Deep Fakes

Lead: Team 4

Required Readings and Viewings (for everyone):

- Hany Farid, Creating, Weaponizing, and Detecting Deep Fakes. Keynote Talk, Spark + AI Summit 2020. (video, 18:57)

- Airen Petalbert, Deepfake Examples That Prove How Scary and Amusing This Technology Is, Tech Times, 30 December 2019.

- Danielle Citron, How deep fakes undermine trust and threaten democracy, TED Talk, July 2019. (video, 13:09)

- Nathan Colaner and Michael J. Quinn, Deepfakes and the Value-Neutrality Thesis, 10 February 2020.

- Jessica Silbey and Woodrow Hartzog, The Upside of Deep Fakes, Maryland Law Review, 2019

Optional Additional Reading:

- Nina Brown, Deepfakes and the Weaponization of Disinformation, Virginia Journal of Law & Technology, 2020. (Brown’s paper provides specific recommendations on addressing deep fakes which are relevant to the discussion questions below.)

Response prompts:

Post a response to the readings that does at least one of these options by 10:59pm on Sunday, February 28 (Team 2 and Team 5) or 5:59pm on Monday, 1 March (Team 1 and Team 3):

- How can we prevent deep fakes from harming society? Responses can address this question from education, technology, or policy perspectives.

- Is deep fake technology really the problem or is the real issue the vulnerabilities found in our education, media, and election systems?

- Is all technology value-neutral or is it only that certain technologies can be value-neutral? If all technology is value-neutral then who is responsible when a designer’s creation is misused? If only certain technologies are value-neutral, then should designers' ideas and creations be regulated in order to stop the production of harmful technologies?

- Respond to something in one of the readings/videos that you found interesting or surprising.

- Identify something in one of the readings/videos that you disagree with or are skeptical about, and explain why.

- Respond constructively to something someone else posted.

Class Meetings

Led by Team 4

Blog Summary

Team 2

Tuesday 2 March

We started off by filling out a survey that asked us about deep fakes. These questions included: Based on the readings/videos, do you think that the benefits of deep fake technology can outweigh the harms? Do you think that deep fakes are really the problem, or is the real issue the vulnerabilities found in our institutions (i.e. education, media, election systems)? After looking at the readings/videos, how confident do you feel in your ability to identify deep fakes (on a scale of 1-5)? What did you find most interesting in the readings/videos?

Our results indicated the following about our class’s opinions:

- Most of us think deep fakes cause more harm than benefit.

- About half the class thinks the problems that come from deep fakes come from the regulations that we’re lacking in our institutions. A quarter of our class thinks actual deep fake technology is the problem. The rest of the class thinks it is a mixture of both.

- Most of us are not confident in our ability to identify deep fakes (most of us chose 2 on a scale of 1-5).

To see a real example of deep fake technology being used, we watched Jordan Peele’s Barack Obama PSA video. Then, the presenter gave a brief summary of what deep fakes are and how they work. The group defined deep fakes as “fake media that has been edited using an algorithm to replace the person in the original video or image with someone else in a way that makes the post look authentic.” Deep fakes are made using generative adversarial networks. The presenter noted that deep fakes are not entirely new; they are a branch of photo manipulation that uses more advanced (ML/AI) techniques.

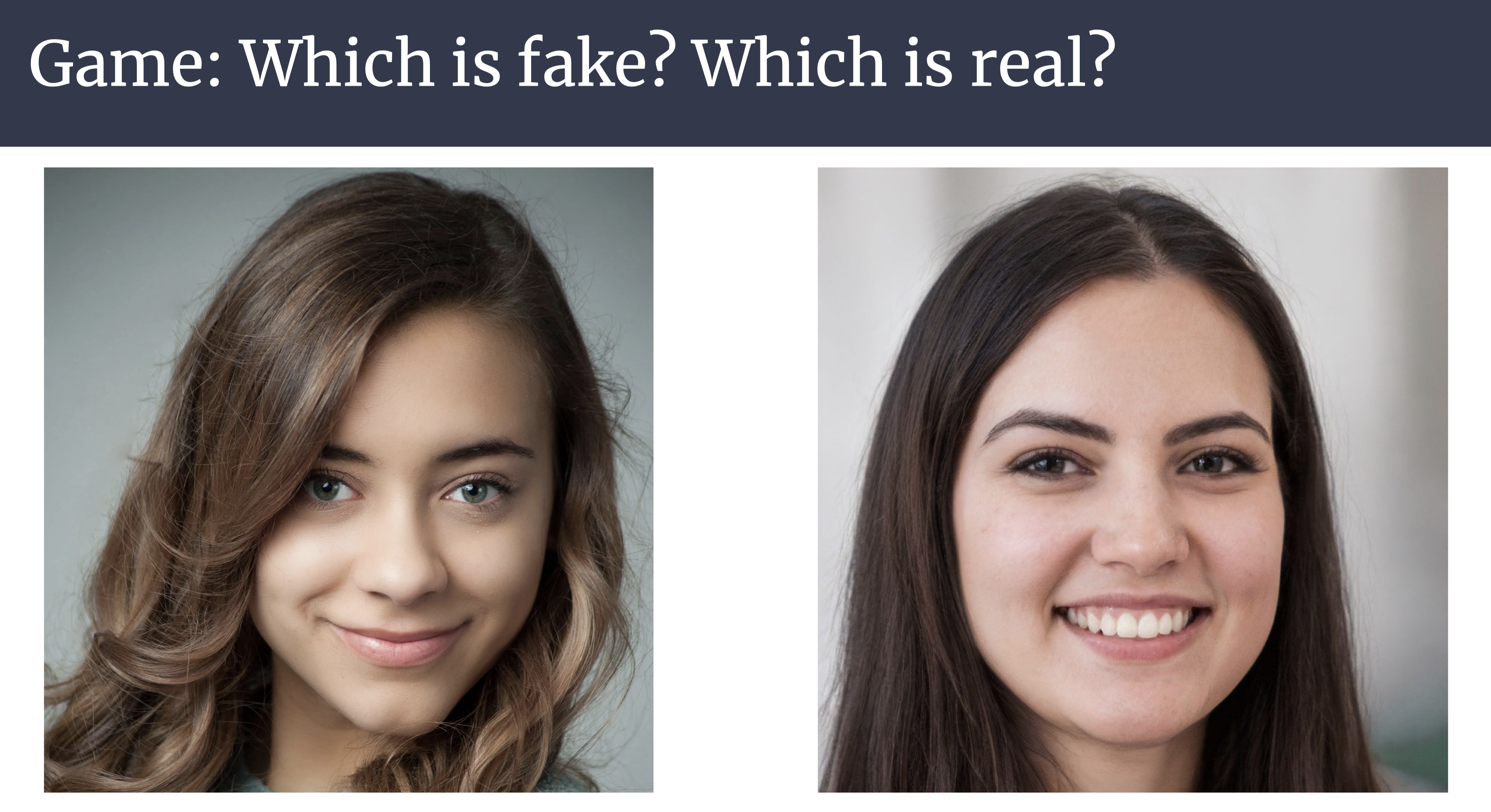

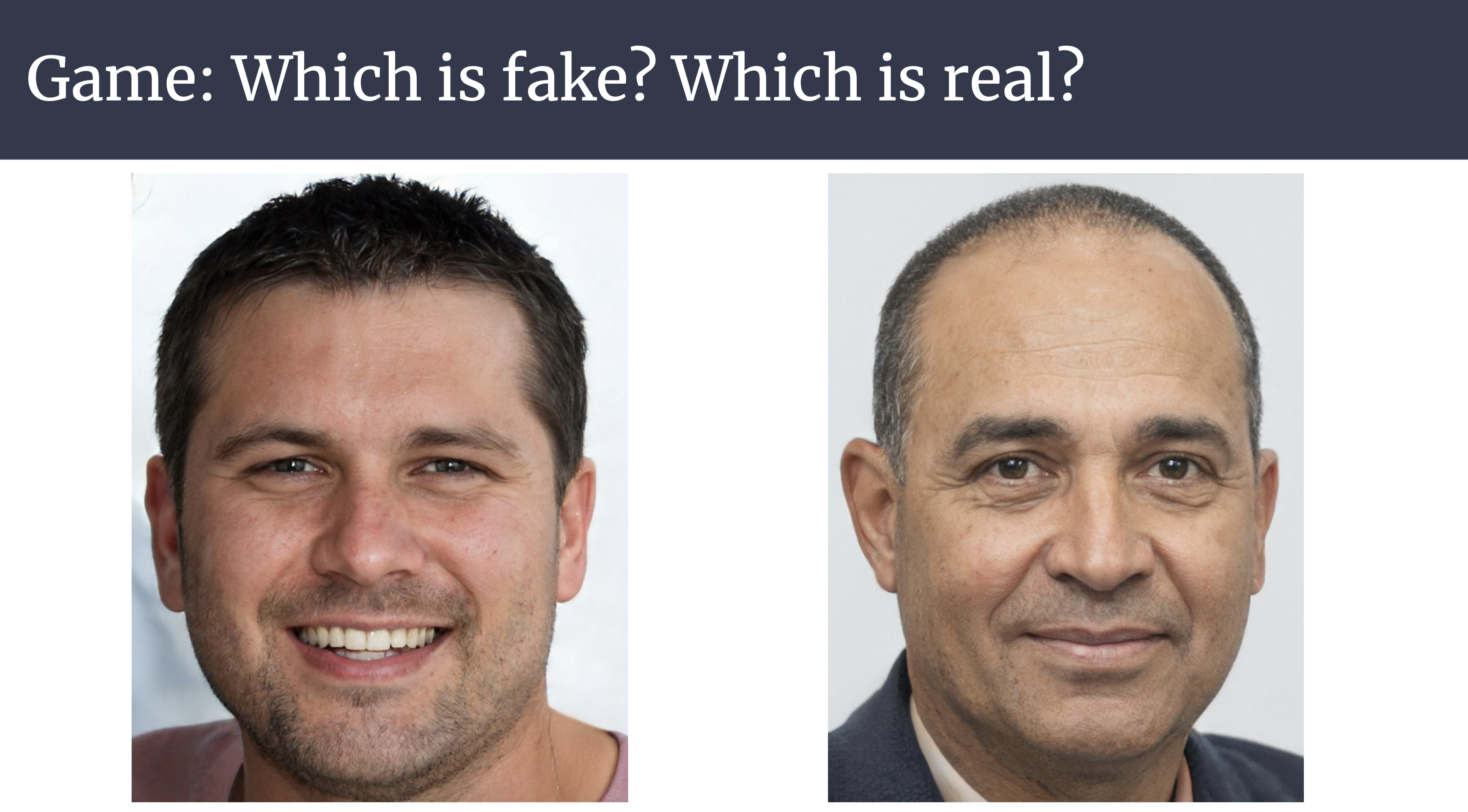

We played a game in which we were shown pictures of people and had to identify which photo was real and which was fake. The game illustrated how difficult it is to identify fake photos, as there was never full consensus about which was the fake. When discussing the photos as a class, people were divided and justified their choices by pointing to small details.

The main points in the video Creating, Weaponizing, and Detecting Deep Fakes were how deep fakes are generated, the types of deep fakes, and how deep fakes are weaponized. The video also covered how behavioral mannerisms can be used to detect deep fakes. Farid uses the example of how former President Obama tilts his head and frowns at the same time. He also calls out social media platforms for their role in the deep fake problem. In building their algorithm for tracking and detecting deep fakes, the researchers found that each person's mannerisms are consistent and distinct from other people's, that there is a bounding surface around every grouping of authentic videos, and that real and fake photos can be classified with high accuracy, though not correctly 100% of the time.
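To make the "bounding surface" idea concrete, here is a minimal sketch. It does not reproduce the features or classifier from the talk; as an assumption for illustration, a simple centroid-and-radius rule stands in for the learned boundary, and the feature values and the `margin` parameter are made up.

```python
import math

def centroid(vectors):
    """Component-wise mean of a list of equal-length feature vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def fit_boundary(authentic_vectors, margin=1.1):
    """Fit a sphere around one person's authentic-video features.

    Returns (center, radius). The radius is the largest distance from the
    center to any training vector, scaled by a hypothetical safety margin.
    """
    center = centroid(authentic_vectors)
    radius = max(math.dist(center, v) for v in authentic_vectors) * margin
    return center, radius

def looks_authentic(features, center, radius):
    """True if the features fall inside the fitted boundary."""
    return math.dist(center, features) <= radius

# Made-up two-dimensional features (say, head tilt and brow movement)
# measured from videos known to be authentic.
authentic = [[0.9, 0.8], [1.0, 1.0], [1.1, 0.9], [0.95, 1.05]]
center, radius = fit_boundary(authentic)

print(looks_authentic([1.0, 0.95], center, radius))  # close to the cluster
print(looks_authentic([0.2, 0.1], center, radius))   # far from the cluster
```

A video whose mannerism features land outside the boundary is treated as suspicious. A tighter margin flags more fakes but also more genuine videos, which is one reason accuracy cannot reach 100%.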

In our small groups, we discussed the following questions: 1) Discuss with your groups and clarify your understanding of the technical aspects of deep fakes and 2) If you were developing software to generate deep fakes, how would you break deep fake detectors? This led to a more detailed explanation of how deep fake technology works and the process used to produce deep fake media. Suggestions for breaking deep fake detectors included adding inputs to the doctored videos that would cause the detectors to fail, so that fakes pass as authentic (false negatives).
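The suggestion of adding inputs that make a detector fail can be sketched with a toy example. Everything here is an assumption for illustration: the detector is a hypothetical linear scorer rather than any real system, the attacker is assumed to know its weights, and the numbers are made up.

```python
def detector_score(features, weights, bias):
    """Toy linear detector: a positive score means 'flag as fake'."""
    return sum(w * x for w, x in zip(weights, features)) + bias

def evade(features, weights, epsilon):
    """Nudge each feature by epsilon against the sign of its weight.

    For a linear scorer this lowers the score by epsilon * sum(|w|),
    the largest drop available when each feature may move by at most epsilon.
    """
    def sign(v):
        return (v > 0) - (v < 0)
    return [x - epsilon * sign(w) for x, w in zip(features, weights)]

weights = [2.0, -1.0, 0.5]  # hypothetical detector weights
bias = -1.0
fake = [0.9, 0.2, 0.6]      # hypothetical features of a doctored video

before = detector_score(fake, weights, bias)
after = detector_score(evade(fake, weights, epsilon=0.3), weights, bias)

# The doctored video is flagged before the nudge and slips through after it.
print(before > 0, after > 0)
```

The small, targeted change turns a correct detection into a false negative, which is the failure mode the groups described.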

Next, we reviewed the article from Tech Times, Deepfake Examples That Prove How Scary and Amusing This Technology Is. The article pointed to multiple examples of deep fake technology being used maliciously against public figures, including Donald Trump, Barack Obama, Nancy Pelosi, and Mark Zuckerberg. Greater detail was given for the deep fake video of Zuckerberg, where he “exposes” Facebook’s actual intent, referencing the Spectre program used to collect data on its users and how the company uses that data to “own” its users. The presenter noted the dangers of each example and how these videos can alter the public’s perception of an individual.

In our small groups, we discussed the following questions: 1) What interventions and regulations should be put in place for this technology? and 2) With the knowledge that deep fake detectors could backfire if put into the wrong hands, who do you think should be allowed to have access to deep fake detectors? Responses included the need to balance restricting misinformation with allowing expression. Another point raised was whether there is a way to verify or flag that a video comes from the original source or has been altered. A final point to keep in mind when thinking about regulating deep fakes is how to tell whether a photo or video was edited for simply aesthetic or harmless purposes or whether it could have harmful consequences.
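One simple way to check whether a video is the original or an altered copy is to compare cryptographic fingerprints. The sketch below is only an illustration under stated assumptions: it assumes the original source publishes a SHA-256 fingerprint through a channel the viewer trusts, and the function names are hypothetical.

```python
import hashlib
import hmac

def fingerprint(data: bytes) -> str:
    """SHA-256 hex digest of the raw file contents."""
    return hashlib.sha256(data).hexdigest()

def matches_original(candidate: bytes, published_fingerprint: str) -> bool:
    """True only if the candidate is byte-for-byte identical to the original."""
    return hmac.compare_digest(fingerprint(candidate), published_fingerprint)

# Stand-in bytes; a real check would read the video file in binary mode.
original = b"frames of the original video"
published = fingerprint(original)

print(matches_original(original, published))
print(matches_original(b"frames of an altered video", published))
```

This flags any change at all, including harmless re-encoding or cropping, so it can confirm that a copy is untouched but cannot say whether an edit was malicious. That limitation is the same harmless-versus-harmful distinction raised in the discussion.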

For our homework, we were assigned to read pages 32-37 of Deepfakes and the Weaponization of Disinformation by Nina Brown. We also had to find an example of a deep fake that is of good/neutral value. An example they showed us was the tweet about the deep fakes of Tom Cruise.

See the Week 5 Activity post comments for more examples.

Thursday 4 March

We started Thursday’s class with a funny video on deep fakes. The video featured members of the UVA computer science staff singing along to Big Time Rush. This was a fun video that showed the creative side of deep fakes. It served as a good introduction to the theme and dilemma of Thursday’s class: do the benefits of deep fakes outweigh the negative impacts and, if not, how do we handle them moving forward?

After watching the video, we had a discussion about the assigned homework and shared various sites relevant to deep fakes. Most responses were examples involving the film industry and nostalgia. One in particular was a website that traces your lineage, discovers photos of old family members, and animates them using deep fake technology: https://www.myheritage.com/family-tree?genealogy=1.

Team 5 then summarized the Colaner and Quinn article on value-neutrality. Essentially, the authors conveyed that technology is neutral until it is used for either positive or negative purposes. This is what gives a technology a positive or negative label. Nevertheless, this argument can be misused to absolve developers of responsibility for what they create. Deep fakes are an example of a technology that has great potential for bad purposes, and the authors argue that their potential harms must be outweighed by their potential benefits in order for deep fakes to be value-neutral.

Following this summary, the class discussed the following questions: (1) Do you agree or disagree with Colaner and Quinn that deep fakes are not value neutral? (2) Can you think of potential benefits that could outweigh the negatives they listed in the article?

We then covered the TED Talk by UVA Law Professor Danielle Citron. As a professor who teaches and writes about information privacy, free expression, and civil rights, she discusses the issues raised by deep fakes and the powerlessness of the legal system to help victims of deep fakes, like Indian journalist Rana Ayyub. Ayyub, like other famous and prominent figures in media, entertainment, and other areas, was a target of non-consensual deep fake pornography.

It was noted that people can invoke deep fake technology to escape accountability, a concept called “The Liar’s Dividend” and one used to discredit individuals like Ayyub. This was a traumatic experience for Ayyub, but as Citron mentions, there is not much that the legal system could do to help her. Victims are simply told to turn their computers off and ignore the issue. Hence, Citron is a strong believer in legislation to ban harmful digital impersonations that are as bad as identity theft, and she argues that people must be educated about deep fakes. In addition, she believes that social media platforms should change and enforce updated terms of service.

With a lack of legal support, it is important that attorneys and legal academics spend time understanding and evaluating how deep fakes might be handled moving forward. In the optional Nina Brown reading, she discusses the problem that technology for combating deep fakes can’t keep up with their rapid development; however, she agrees that new legislation is necessary to address harm from deep fakes. She mentions that researchers are pushing to develop detection algorithms and digital forensics, and that education, media literacy, and addressing threats are all necessary in this fight between technological freedom and constitutional rights.

In small groups, the class discussed three questions: (1) Who benefits from photo/video manipulation technology? Who is harmed? Why? (1.a.) Industries like film can benefit, especially if there is a death on set. Deep fake technology might help finish any scenes that a deceased person was supposed to be in. (2) How should we prevent deep fakes from causing harm to society and marginalized groups? Discuss different approaches from the perspective of technology, education, legislation, etc. (3) What (if any) actions should online platforms (Reddit, Twitter, YouTube, etc.) be taking to combat the spread of deep fakes on their sites? (3.a.) What are the ethical implications of choosing to remove or keep fake videos and images on a large social platform?

Some of the responses from this discussion concerned the dilemmas and ethical problems that arise with companies. For example, social media profits off viral content, which becomes an issue when trying to implement restrictions on content. On a business level, Section 230 gives companies immunity from liability for content their users post. It states that “No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.” This becomes an issue when trying to restrict the content on a company’s platform.

Team 5 summarized the final reading, The Upside of Deep Fakes. The argument is that deep fakes can be the final push towards repairing fundamental institutions in our society. The authors focus on media, education, and democratic institutions. To begin, the paper mentions that social media feeds are perfect for cultivating deep fakes and that it is important to invest in trusted journalism and reestablish norms of authentication and verification. Moreover, it is important to empower advocacy organizations. The authors then discuss the role of deep fakes in education and democratic institutions, highlighting that fakes should be debunked in schools and that greater measures need to be taken to keep deep fakes from swaying elections.

To close, we discussed the following questions: (1) Do you agree with the Upside of Deep Fakes article? Do deep fakes only accentuate old/deep problems without creating new ones? (2) Knowing the harms and upsides of deep fakes, what actions should be taken now? One common opinion for question 1 was that deep fakes do both. One example was that they accentuate slander and libel, which are both old constitutional issues; however, deep fakes provide a lasting, visual version of libel. Moreover, it is more difficult to track the producers of harmful deep fakes. Even though it may prove difficult to enforce, especially on an international level, many classmates believe that some form of law should address the abuse of deep fake technology.